In the age of artificial intelligence, the path forward can sometimes be unclear. While AI holds great promise for unlocking new innovations, it also introduces new risks and threats. As AI advancements continue to accelerate, federal officials are taking differing views of the technology and of how to implement it.


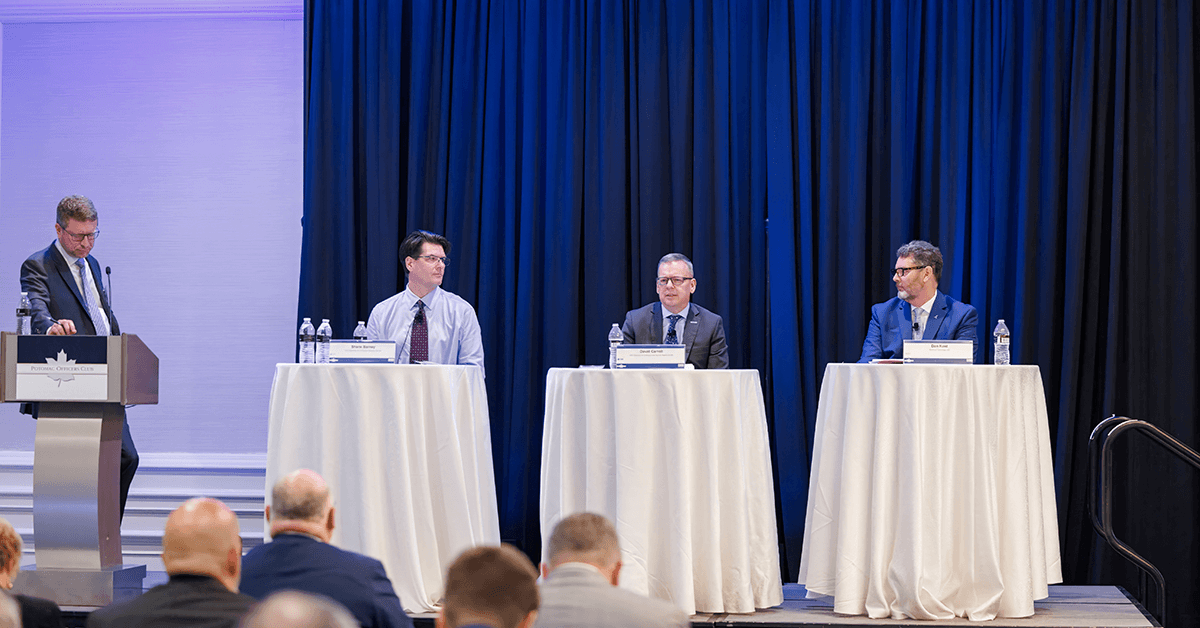

For some government leaders, AI is essential to future innovation. David Carroll, associate director for mission engineering at the Cybersecurity and Infrastructure Security Agency, said AI is critical in making the leap from data to decision.

“Data must become information, information must become action,” he said during a panel discussion at the Potomac Officers Club’s 2024 Cyber Summit. “That jump from information to action in our case has to be accurate for our critical infrastructure and for all of you on the federal side, for all of you on the state, local, tribal side, you always want answers. And that’s what our superpower should be, is to give those reliable answers. We won’t be able to do that without artificial intelligence.”

But other federal officials are concerned about the future impact of AI, especially when combined with other technologies and advancements. Shane Barney, chief information security officer for the U.S. Citizenship and Immigration Services, underscored the importance of having security in place now before future threats have the chance to impact systems.

“I’m worried about generative AI security. I’m actually more worried about what comes after that,” said Barney. “Quantum is going to hit, going to come into the fray at some point. When quantum becomes part of the generative AI discussion, we’re going to be talking about new technology. If we don’t have that base layer of security in place today, there’s no way we’ll be able to handle it.”

Policy is another dimension of the AI implementation conversation. Panelists agreed that AI policies should protect organizations from risk without being so restrictive that they dampen innovation, especially at this critical juncture in the race for global AI dominance.

“The opportunity here is just enormous,” said Dan Kent, chief technology officer at Red River Technology. “We do not want to get behind other nations by putting too [many] controls on U.S. companies.”

Barney noted that, for organizations in particular, policies should be driven by the fluid nature of both the threats and the opportunities posed by AI.

“You’ve got to adapt, you’ve got to be able to match the velocity” of the threats, Barney said. “The first thing we’ve got to change about our policies is how do we make our organization more fluid and operational? How do we adapt and change what we need to as new threats come to the horizon?”